Suppose you have a question like “What’s the standard operating procedure for working on a 737 aircraft wheel?” You could guess or rely on what you already know, but you’d probably get better results by looking up the SOP documents or asking an expert. Now think of artificial intelligence (AI) in a similar way. Two powerful tools, Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG), help AI give you smarter, more accurate answers. Let’s break them down in a way that’s easy to understand, even if you’re new to AI.

What Are Large Language Models (LLMs)?

An LLM is like a super-smart librarian who has read millions of books, articles, and websites. It’s a type of AI trained on massive amounts of text, so it understands language patterns, grammar, and even facts about the world. When you ask an LLM a question, it generates an answer based on what it “remembers” from all that text.

For example, if you ask, “Why is the sky blue?” an LLM might explain that sunlight scatters off the gases in the atmosphere, and blue light scatters more than other colors. It doesn’t look up this information; it picked it up during training and carries it in its “brain.”

How LLMs Work (Simply):

- Training: The AI is fed billions of words from books, websites, and more. It learns how words and ideas connect.

- Generating Answers: When you ask a question, the LLM predicts the most likely next word (technically a “token,” which can be a word or part of a word), one at a time, to build a response. It’s like autocomplete on steroids!

- Strengths: LLMs are great at understanding and generating human-like text. They can write stories, answer questions, or even help with homework.

- Limits: LLMs only know what was in their training data, so they can miss recent information (their “books” might be outdated), and they sometimes make things up with full confidence. This is called “hallucination.”
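To make “autocomplete on steroids” a little more concrete, here is a toy sketch in Python. It is only an analogy, not how a real LLM works internally: real models use large neural networks over tokens and learn from vastly more text. This version simply counts which word follows which in a tiny, made-up corpus (“training”), then builds a sentence one word at a time by picking the most frequent next word (“generating”). The corpus and the function names `train_bigram_model` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict

# A tiny, made-up "training corpus" (hypothetical; real LLMs train on billions of words).
CORPUS = (
    "the sky is blue . the sky is clear today . "
    "the sea is blue . the grass is green ."
)


def train_bigram_model(text: str) -> dict[str, Counter]:
    """'Training': count how often each word is followed by each other word."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """'Generation': repeatedly append the most likely next word, one at a time."""
    output = [start]
    for _ in range(max_words):
        candidates = model.get(output[-1])
        if not candidates:
            break  # the model never saw this word followed by anything
        next_word, _count = candidates.most_common(1)[0]
        output.append(next_word)
        if next_word == ".":
            break  # stop at the end of a sentence
    return " ".join(output)


if __name__ == "__main__":
    model = train_bigram_model(CORPUS)
    print(generate(model, "the"))    # the sky is blue .
    print(generate(model, "grass"))  # grass is blue .
```

Starting from “grass,” the toy model produces “grass is blue .”, a fluent sentence that is simply false, because “is” was most often followed by “blue” in its training text. That is a miniature version of the hallucination problem described in the list above.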

Think of an LLM as a know-it-all friend who’s super confident but doesn’t always double-check their facts.

What Is Retrieval-Augmented Generation (RAG)?

Now, let’s add a twist. What if our librarian could not only rely on their memory but also quickly grab the latest books or articles to answer your question? That’s what RAG does for AI. RAG combines the power of LLMs with a system that searches for fresh, relevant information to make answers more accurate and up-to-date. RAG doesn’t replace the LLM; the two work together to improve the results.

How RAG Works (Simply):

- Step 1: Your Question: You ask something like, “What’s the latest news on electric cars?”

- Step 2: Retrieval: RAG searches a database, the internet, or a specific set of documents to find the most relevant and recent information. Think of this as the AI “Googling” trusted sources.

- Step 3: Augmentation: The system adds this fresh information to the prompt it sends to the LLM, as if saying, “Here’s the latest data. Use it to answer the question.”

- Step 4: Generation: The LLM crafts a clear response grounded in both its own knowledge and the new information.
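The four steps above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: the document store and its contents are made up, retrieval uses plain word overlap rather than the search engines or vector databases real RAG systems typically rely on, and the final generation step is left out because it would require calling a specific LLM service. The names `DOCUMENTS`, `retrieve`, and `build_prompt` are hypothetical.

```python
import re

# Hypothetical document store; in practice this could be manuals, reports, or web pages.
DOCUMENTS = {
    "returns.txt": "Customers can return unused items within 30 days for a full refund.",
    "shipping.txt": "Standard shipping takes 3 to 5 business days within the country.",
    "warranty.txt": "Electronics carry a one-year warranty covering manufacturing defects.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: dict[str, str], top_k: int = 1) -> list[str]:
    """Step 2 (Retrieval): rank documents by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(
        docs.items(),
        key=lambda item: len(q_words & tokenize(item[1])),
        reverse=True,
    )
    return [text for _name, text in ranked[:top_k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Step 3 (Augmentation): place the retrieved text in front of the question."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Use the following information to answer the question.\n"
        f"{context_block}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    question = "How many days do I have to return unused items?"  # Step 1
    context = retrieve(question, DOCUMENTS)
    prompt = build_prompt(question, context)
    # Step 4 (Generation) would send `prompt` to an LLM; that call is omitted here.
    print(prompt)
```

Here the returns document shares four words with the question (“return,” “unused,” “items,” “days”), so it is the one handed to the LLM. Production systems commonly swap the word-overlap scoring for embedding-based similarity search, which can match meaning even when the wording differs.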

For example, if you ask about electric car trends in 2025, RAG might pull up recent articles about new battery technology, then let the LLM summarize them into a concise answer. This makes the response more reliable than if the LLM just guessed based on older data.

Why RAG Is Awesome:

- Up-to-Date Answers: RAG helps AI stay current by fetching the latest info.

- More Accurate: It reduces the chance of the AI making things up by grounding answers in real data.

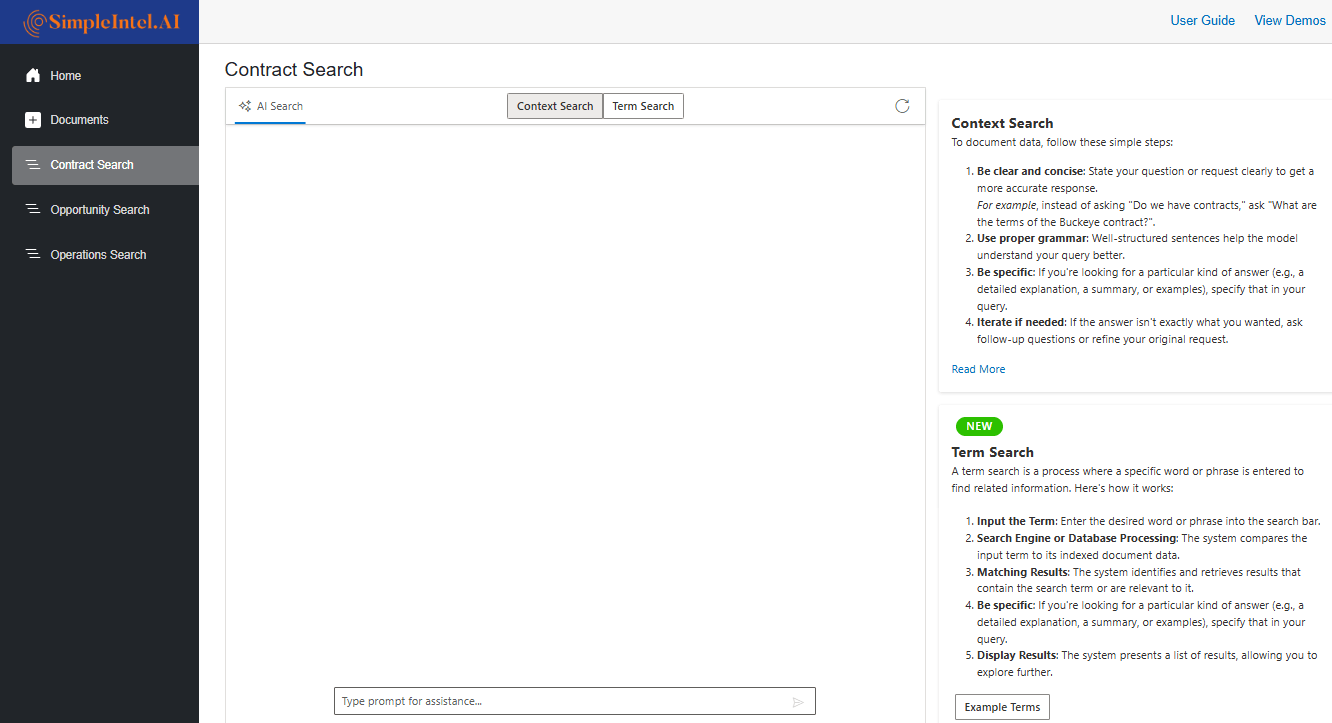

- Customizable: Companies can use RAG to search their own documents (like manuals or reports) to answer specific questions.

How Do LLMs and RAG Work Together?

Picture this: An LLM is a chef who knows tons of recipes by heart, but RAG is like a delivery service that brings fresh ingredients to the kitchen. The chef (LLM) can cook something tasty on their own, but with fresh ingredients (RAG), the dish is even better and more relevant to what you’re craving.

Here’s a real-world example:

- Without RAG: You ask an LLM, “What’s the weather like in New York today?” If the LLM was trained on data up to 2023, it might guess or say it doesn’t know.

- With RAG: The system pulls today’s weather report from a trusted source, feeds it to the LLM, and you get an answer like, “It’s sunny in New York today with a high of 68°F.”

Together, LLMs and RAG make AI more like a helpful assistant who not only knows a lot but also checks the facts when needed.

Why Should You Care?

You might be thinking, “This sounds cool, but how does it affect me?” Here’s why LLMs and RAG matter:

- Better Chatbots: Ever talked to a customer service bot that actually understands you? There’s a good chance that’s an LLM with RAG, pulling answers from a company’s knowledge base.

- Smarter Search: Tools like AI-powered search engines or virtual assistants (think Siri or Alexa on steroids) use these technologies to give you accurate, current answers.

- Work and Learning: Students can get help with research, and businesses can use RAG to find answers in their own files or to summarize a 100-page report in seconds.

- Trustworthy AI: RAG helps AI avoid spreading misinformation by grounding answers in specific, trusted sources that you can check.

A Few Things to Keep in Mind

While LLMs and RAG are powerful, they’re not perfect:

- Quality Matters: If RAG pulls from bad or biased sources, the answers might not be great. It’s like cooking with spoiled ingredients.

- Privacy: If you’re using RAG with private documents, you need to make sure the system is secure.

- Complexity: Setting up RAG requires technical know-how (preparing documents, choosing a search method, and connecting it to an LLM), so it’s not a typical at-home project, although managed cloud services are making it easier.

Leveraging LLMs and RAG

Large Language Models (LLMs) are like super-smart AIs that understand and generate human language, while Retrieval-Augmented Generation (RAG) makes them even better by adding fresh, reliable information to the mix. Together, they’re powering the next generation of AI tools that can answer your questions, help with work, or even spark creativity—all while being more accurate and up-to-date.

Next time you chat with an AI or use a smart search tool, you’ll know there’s a good chance LLMs and RAG are working behind the scenes, like a brainy librarian and a super-fast researcher teaming up to help you out.

If you’re curious to learn more about LLMs and RAG, or want to try other AI tools yourself, let us know and we’ll show you how these technologies can improve your business.

Learn More:

Nataero AI – https://simpleintel.ai/nataero-ai-software/

AWS LLM – https://aws.amazon.com/what-is/large-language-model/

Google RAG – https://cloud.google.com/use-cases/retrieval-augmented-generation